
Amazon S3 File Upload Api Conference

Uncategorized 2020. 3. 1. 23:08

Amazon S3 is a widely used public cloud storage system. S3 places a generous upper limit on the size of a single object/file, which is enough for most applications.

The AWS Management Console provides a Web-based interface for users to upload and manage files in S3 buckets. However, uploading a large file that is 100s of GB in size is not easy using the Web interface. From my experience, it fails frequently. There are various third-party commercial tools that claim to help people upload large files to S3, and Amazon also provides an API on which most of these tools are based. While these tools are helpful, they are not free, and AWS already provides users a pretty good tool for uploading large files to S3: the aws command line tool from Amazon. From my test, the aws s3 command line tool can achieve more than 7MB/s uploading speed on a shared 100Mbps network, which should be good enough for many situations and network environments.
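To put the 7MB/s figure in perspective, here is a small back-of-the-envelope sketch. The 150 GB file size and the decimal units (1 GB = 1000 MB) are assumptions for illustration, and the function names are made up for this example.

```python
def link_utilization(throughput_mb_per_s: float, link_mbps: float) -> float:
    """Fraction of the link's nominal capacity used by the upload."""
    link_mb_per_s = link_mbps / 8  # 8 bits per byte
    return throughput_mb_per_s / link_mb_per_s


def upload_hours(file_size_gb: float, throughput_mb_per_s: float) -> float:
    """Estimated upload time in hours, assuming 1 GB = 1000 MB."""
    seconds = file_size_gb * 1000 / throughput_mb_per_s
    return seconds / 3600


if __name__ == "__main__":
    # 100 Mbps is 12.5 MB/s, so 7 MB/s is a bit over half of the link.
    print(f"link utilization: {link_utilization(7, 100):.0%}")
    # Assumed 150 GB file at a steady 7 MB/s.
    print(f"150 GB at 7 MB/s: {upload_hours(150, 7):.1f} hours")
```

In other words, at that speed a file of this size would take roughly a quarter of a day, which is why an upload that can survive the whole transfer matters.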

In this post, I will give a tutorial on uploading large files to Amazon S3 with the aws command line tool.

Install aws CLI tool

Assume that you already have a Python environment set up on your computer. You can install the aws tools using pip or using the bundled installer:

    $ curl '<bundled installer URL>' -o 'awscli-bundle.zip'
    $ unzip awscli-bundle.zip
    $ sudo ./awscli-bundle/install -i /usr/local/aws -b /usr/local/bin/aws

Try to run aws after installation. If you see output as follows, you should have installed it successfully.

    $ aws
    usage: aws [options] <command> <subcommand> [parameters]
    To see help text, you can run:
      aws help
      aws <command> help
      aws <command> <subcommand> help
    aws: error: too few arguments

Configure aws tool access

The quickest way to configure the AWS CLI is to run the aws configure command:

    $ aws configure
    AWS Access Key ID: foo
    AWS Secret Access Key: bar
    Default region name [us-west-2]: us-west-2
    Default output format [None]: json

Here, your AWS Access Key ID and AWS Secret Access Key can be found on the AWS Console.

Uploading large files

Lastly, the fun comes. Here, assume we are uploading the large file ./150GB.data to s3://systut-data-test/storedir/ (that is, directory storedir under bucket systut-data-test) and that the bucket and directory are already created on S3.

The command is:

    $ aws s3 cp ./150GB.data s3://systut-data-test/storedir/

After it starts to upload the file, it will print a progress message like

    Completed 1 part(s) with ... file(s) remaining

at the beginning, and a progress message as follows when it is reaching the end.

    Completed 9896 of 9896 part(s) with 1 file(s) remaining

After it successfully uploads the file, it will print a message like

    upload: ./150GB.data to s3://systut-data-test/storedir/150GB.data

aws has more commands to operate on files on S3. I hope this tutorial helps you get started with it. Check the aws documentation for more details.
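The "part(s)" in the progress messages reflect that a large file is uploaded in pieces rather than in one request. The following is a minimal sketch of how a part size could be chosen, assuming S3's limits of at most 10,000 parts per multipart upload and a 5 MiB minimum part size. The 8 MiB starting size and the doubling strategy are assumptions for illustration; this is not claimed to be what the aws tool does internally, so the result need not match the 9896 parts shown above.

```python
from typing import Tuple

MAX_PARTS = 10_000              # S3 limit on parts in one multipart upload
MIN_PART_SIZE = 5 * 1024 ** 2   # S3 minimum part size (5 MiB), last part excepted


def plan_parts(file_size: int, part_size: int = 8 * 1024 ** 2) -> Tuple[int, int]:
    """Return (part_size, part_count) that stays within the part limit.

    Starts from an assumed 8 MiB part size and doubles it until the
    file fits in MAX_PARTS parts.
    """
    if file_size <= 0:
        raise ValueError("file_size must be positive")
    part_size = max(part_size, MIN_PART_SIZE)
    # -(-a // b) is integer ceiling division.
    while -(-file_size // part_size) > MAX_PARTS:
        part_size *= 2
    return part_size, -(-file_size // part_size)


if __name__ == "__main__":
    size, count = plan_parts(150 * 1024 ** 3)  # a 150 GiB file
    print(f"{size // 1024 ** 2} MiB parts, {count} parts")
```

For a 150 GiB file, 8 MiB parts would need 19,200 parts, which is over the limit, so the sketch settles on 16 MiB parts.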

Amazon S3 File Upload Api Conference 2016

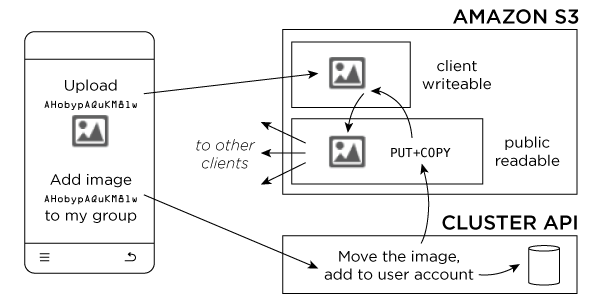

I need help on how to upload multiple files to Amazon S3. Basically, I have three input fields for file uploads: two inputs will each take 10-20 pictures, and the last input takes only one image. All of them should be uploaded to Amazon S3 when the form is submitted.

I already have the upload form, a bucket, and everything else set up; what I need is some kind of solution to upload multiple images to Amazon S3.

I'm using PHP as my backend, and for now the images are stored on my hosting when the form is submitted. But I will have more than 150 GB of images uploaded every month, and I need S3 for hosting those images.

When I connect the form with Amazon S3 and try to upload more than one image, I get this message: 'POST requires exactly one file upload per request.'

S3 Uploading Objects

S3 is highly scalable, distributed storage. If you have those images locally on your machine, you can simply use

    aws s3 sync localfolder s3://bucketname/

The CLI takes care of syncing the data. You can also configure how much parallelism you want in the CLI with its configuration settings. You can also do this programmatically if it is going to be a continuous data movement.

EDIT1: Only one file can be uploaded from the UI at one time. You can sequence them via JavaScript and upload one at a time. If you want to take it to the backend, you can do so.

Hope it helps.
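If you go the programmatic route, the "one file per request" idea could look roughly like the sketch below. It only walks a local folder and hands the files to an uploader one at a time; the names `plan_uploads`, `upload_all`, and `upload_one` are made up for this example, and the actual upload call is left to whatever client you use.

```python
import os
from typing import Callable, List, Tuple


def plan_uploads(local_folder: str, key_prefix: str = "") -> List[Tuple[str, str]]:
    """Return sorted (local_path, object_key) pairs for every file under local_folder."""
    pairs = []
    for root, _dirs, files in os.walk(local_folder):
        for name in files:
            path = os.path.join(root, name)
            # Object keys use "/" regardless of the local path separator.
            rel = os.path.relpath(path, local_folder).replace(os.sep, "/")
            pairs.append((path, key_prefix + rel))
    return sorted(pairs)


def upload_all(pairs: List[Tuple[str, str]],
               upload_one: Callable[[str, str], None]) -> int:
    """Upload the files sequentially, one file per call, and return the count."""
    count = 0
    for path, key in pairs:
        upload_one(path, key)  # hypothetical uploader supplied by the caller
        count += 1
    return count


if __name__ == "__main__":
    # Dry run: print what would be uploaded instead of sending anything.
    pairs = plan_uploads(".", key_prefix="images/")
    upload_all(pairs, lambda path, key: print(f"{path} -> {key}"))
```

With boto3, for instance, `upload_one` could be a small wrapper around the S3 client's `upload_file(path, bucket, key)` call, with the bucket name being your own.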